the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

What could we learn about climate sensitivity from variability in the surface temperature record?

James D. Annan

Julia C. Hargreaves

Thorsten Mauritsen

Bjorn Stevens

We examine what can be learnt about climate sensitivity from variability in the surface air temperature record over the instrumental period, from around 1880 to the present. While many previous studies have used trends in observational time series to constrain equilibrium climate sensitivity, it has also been argued that temporal variability may also be a powerful constraint. We explore this question in the context of a simple widely used energy balance model of the climate system. We consider two recently proposed summary measures of variability and also show how the full information content can be optimally used in this idealised scenario. We find that the constraint provided by variability is inherently skewed, and its power is inversely related to the sensitivity itself, discriminating most strongly between low sensitivity values and weakening substantially for higher values. It is only when the sensitivity is very low that the variability can provide a tight constraint. Our investigations take the form of “perfect model” experiments, in which we make the optimistic assumption that the model is structurally perfect and all uncertainties (including the true parameter values and nature of internal variability noise) are correctly characterised. Therefore the results might be interpreted as a best-case scenario for what we can learn from variability, rather than a realistic estimate of this. In these experiments, we find that for a moderate sensitivity of 2.5 ∘C, a 150-year time series of pure internal variability will typically support an estimate with a 5 %–95% range of around 5 ∘C (e.g. 1.9–6.8 ∘C). Total variability including that due to the forced response, as inferred from the detrended observational record, can provide a stronger constraint with an equivalent 5 %–95 % posterior range of around 4 ∘C (e.g. 1.8–6.0 ∘C) even when uncertainty in aerosol forcing is considered. Using a statistical summary of variability based on autocorrelation and the magnitude of residuals after detrending proves somewhat less powerful as a constraint than the full time series in both situations. Our results support the analysis of variability as a potentially useful tool in helping to constrain equilibrium climate sensitivity but suggest caution in the interpretation of precise results.

- Article

(1426 KB) - Full-text XML

-

Supplement

(57 KB) - BibTeX

- EndNote

For many years, researchers have analysed the warming of the climate system as observed in the modern instrumental temperature record (spanning the mid-19th to early-21st century), in order to understand the response of the climate system to external forcing. For the most part, the focus has been on the long-term energy balance as constrained by the warming trend in atmospheric and oceanic temperatures (e.g. Gregory et al., 2002; Otto et al., 2013; Lewis and Curry, 2015). However, some research has focused more specifically on the temporal variability exhibited in the surface air temperature record (Schwartz, 2007; Cox et al., 2018a), which is the focus of this paper.

Schwartz (2007) argued on the basis of a simple zero-dimensional energy balance model that an analysis based on the fluctuation–dissipation theorem (Einstein, 1905) could be used to directly diagnose the sensitivity of the Earth's climate system S – here conventionally defined as the equilibrium surface air temperature response to a doubling of the atmospheric CO2 concentration – from variability in the observed record of annually and globally averaged surface air temperature observations over the observational record. While we do not wish to repeat the arguments here, we will note that several researchers disputed this analysis, demonstrating inter alia that this method did not reliably diagnose the sensitivity of climate models and also arguing why it could not be expected to do so, given their complexity (Foster et al., 2008; Knutti et al., 2008; Kirk-Davidoff, 2009). Perhaps as a consequence of these arguments, this line of research was largely ignored for the subsequent decade.

More recently however, Cox et al. (2018a) reopened this question with an analysis based on an emergent constraint approach. That is, rather than following the directly diagnostic approach of Schwartz (2007), they instead observed that a quasi-linear relationship existed across an ensemble of CMIP5 models (Taylor et al., 2012), between the sensitivities of these models and their interannual temperature variabilities as summarised in a statistic which they denoted Ψ. It has been cogently argued that an emergent constraint should only be taken seriously if supported by some theoretical basis (Caldwell et al., 2014), and Cox et al. (2018a) did indeed present an analysis – again based on simple zero-dimensional energy balance modelling – which qualitatively underpinned this linear relationship. Using the value of Ψ obtained from observations of surface air temperature, together with the empirical relationship between Ψ and S they had derived from the climate models, they produced a best estimate of the equilibrium climate sensitivity of 2.8 ∘C with a likely (66 % probability) range of 2.2–3.4 ∘C, a substantially tighter range than most previous research. However, questions have also been raised about this result (Brown et al., 2018; Rypdal et al., 2018; Po-Chedley et al., 2018; Cox et al., 2018b).

In this paper, we explore the question of to what extent temporal variability in the globally and annually averaged temperature record can be used to constrain equilibrium climate sensitivity. We consider both the internal variability in the climate system itself and also the total variability including deviation from a linear trend due to the forced response. Our investigations are performed in the paradigm of a simple idealised modelling framework, using a two-layer energy balance model which has been widely used to simulate the climate system and which generalises and improves on the performance of the zero-dimensional model. As part of our investigations, we examine the relationship between the Ψ statistic and the equilibrium sensitivity in the model. We also show how the full time series of variability can be used to constrain climate sensitivity, under a variety of idealised scenarios. Our results are based on “perfect model” experiments and therefore may be more readily interpreted as a best-case scenario for what we can learn from variability, rather than a realistic estimate of this.

In the next section, we present the two-layer energy balance model and briefly outline the experimental methods used in this paper. We first focus on internal variability, that is to say, the temporal variability arising entirely from internal dynamics of the climate system in the absence of forcing. We evaluate the power of the Ψ statistic in constraining equilibrium sensitivity and also consider the more general question of what could in principle be learnt from the full time series. We then consider variability over the period of the observational record (primarily the 20th century but with some extension into the 19th and 21st centuries). This includes forced variability due to temporal changes in both natural and anthropogenic forcings as well as the internal variability in the climate system. Throughout the paper, the term variability refers simply to all temporal variation in the annually averaged temperature time series after any linear trend is removed.

2.1 Model

The basic underpinning of previous work is energy balance modelling of the climate system, from which it is anticipated that interannual variability may be informative regarding the equilibrium sensitivity. While previous research was based on analysis of the simplest possible zero-dimensional single-layer planetary energy balance, there is evidence that the behaviour of the climate system over the historical period is poorly modelled by such a system (e.g. Rypdal and Rypdal, 2014). Therefore, we use here a slightly more complex two-layer model based on Winton et al. (2010) and Held et al. (2010). This model has been shown to reasonably replicate the transient behaviour of the CMIP5 ensemble of complex climate models (Geoffroy et al., 2013a, b). The model is defined by the two equations:

This is a two-layer globally averaged energy balance model which simulates the mixed (Tm) and deep (Td) ocean temperature anomalies in the presence of time-varying forcing Ft. is the radiative feedback parameter; S is the equilibrium sensitivity, and F2× is the forcing due to a doubling of the atmospheric CO2 concentration. Cm and Cd are the heat capacities of the mixed-layer and deep ocean respectively, and γ represents the ocean heat transfer parameter. The parameter ϵ was introduced by Winton et al. (2010) to represent the deep-ocean heat uptake efficacy, and while it is not important for our analysis, we include it for consistency with the broader literature. In a slight modification to Winton et al. (2010), we add a noise term δt to the first equation to represent the internal variability in the system as was originally introduced in a single-layer energy balance climate model by Hasselmann (1976). Here δt is sampled on an annual (i.e. time step) basis from a Gaussian N(0,σ) where we generally use the value σ=0.05, which generates deviations of order 0.05 ∘C on an annual basis, reasonably compatible with both general circulation model (GCM) results and observations of the climate system. Our conclusions are not sensitive to this choice. The mixed-layer temperature Tm is considered synonymous with the globally averaged surface temperature. The equations are solved via the simple Euler method with a 1-year time step.

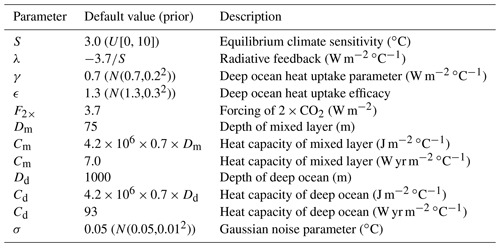

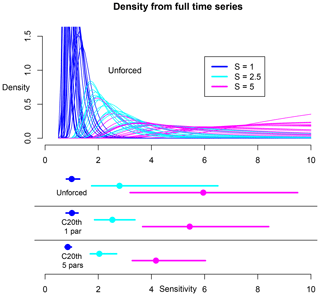

The values of the various adjustable parameters are listed in Table 1. We assume depths of 75 and 1000 m for the mixed and deep ocean layers respectively, which are used to calculate the heat capacities Cm and Cd respectively based on ocean coverage of 70 % of the planetary surface area. The default values for adjustable parameters are given in Table 1, and the values used here lie close to the mean of those obtained by fitting the model to CMIP5 simulations by Geoffroy et al. (2013a). Our aim here is not specifically to replicate or mimic this ensemble but to allow for a reasonable range of parameter values. If we set γ=0 and ignore the deep ocean, then we recover the single-layer model of Hasselmann (1976) which was used by both Schwartz (2007) and Cox et al. (2018a) in their theoretical analyses.

2.2 Bayesian estimation

Our investigations are performed within the paradigm of Bayesian estimation. In general, the Bayesian approach provides us with a way to estimate a set of unknown parameters Θ from a set of observations O via Bayes' theorem,

Here P(Θ|O) is the posterior probability distribution of Θ conditioned on a set of observations O; P(O|Θ) is the likelihood function that indicates the probability of obtaining observations O for any particular set of parameters Θ, which in this paper will always contain S and may include other parameters. P(Θ) is a prior distribution for the parameters Θ, and P(O) is the probability of the observations, which is required as a normalising constant in the calculation of the posterior probability distribution.

Formally, the value of the observations is fully summarised by the likelihood function P(O|Θ), but we primarily present our results as posterior probability density functions (pdf's) in order to provide an easily interpreted output which can be directly compared to previously published results. We therefore use a uniform prior in S as this is typically the implicit assumption in emergent constraint analyses (Williamson et al., 2019). This choice results in the posterior being visually equivalent to the likelihood even though their interpretation is somewhat different. In some experiments, we will consider that only the sensitivity is unknown, but in others we will consider a wider range of parametric uncertainties. The priors that we use for all uncertain parameters are shown in Table 1.

2.3 Additional data

While this study primarily focusses on the behaviour of the simple energy balance model, we also use and present some data from external sources. In order to perform simulations of the historical period, we force our climate model with annual time series for the major forcing factors based on IPCC (Annex II: Climate System Scenario Tables, 2013). Our two-layer model with a 1-year time step (and Euler method of numerical integration) reacts rather too strongly to short-term spikes in forcing, and thus we scale the volcanic forcing to 70 % of the nominal value in order to give more realistic simulations. We show some outputs of the model together with surface air temperature observations from HadCRUT (Morice et al., 2012) as a purely visual indication of the model's performance. We do not use these real temperature observations in any of our analyses, however.

For comparison with our simple model results, we also present some results calculated from historical simulations performed by climate models in the CMIP5 (Taylor et al., 2012) and CMIP6 (Eyring et al., 2016) ensembles. For CMIP5, we use results from 23 models obtained from the Climate Explorer website (https://climexp.knmi.nl/, last access: 20 May 2019). Where multiple simulations were performed with a single model, we show all results (amounting to 89 model runs in total), and these vary substantially due solely to the sample of internal variability in each simulation. Output from CMIP6 models was provided to the authors by Martin Stolpe. Due to the highly variable size of initial condition ensembles in this set of simulations, we limited use to at most five simulations from each model, resulting in a sample of 117 simulations from 31 models.

3.1 Using scalar measures of variability to estimate S

Schwartz (2007) and Cox et al. (2018a) both summarised the variability in the temperature record with a scalar measure that the authors argued (based on simple energy balance modelling) should be informative regarding the sensitivity. Schwartz (2007) summarised the variability via the characteristic decorrelation time constant , where Δt is an adjustable lag time, and ρΔt is the autocorrelation coefficient of the temperature time series at a time lag of Δt. The method of selecting Δt – and therefore the estimation of τ – was not presented in an entirely objective algorithmic form but for the simple one-layer climate model that was considered; the expected value of τ calculated from a long unforced time series is independent of lag. Cox et al. (2018a) argued that the function should be linearly related to the equilibrium sensitivity. In this function, ρ1 is the lag-1 autocorrelation of the time series of annual mean surface temperatures, and σT is the magnitude of interannual variability in these temperatures. Ψ and τ are closely related and covary very similarly over a wide range of sensitivity when other model parameters are held fixed (not shown). Henceforth in this section we focus solely on Ψ as it is more precisely defined and has been recently discussed in some detail (Williamson et al., 2019). However, very similar results are also obtained when equivalent experiments are performed using τ.

We now present some investigations into the relationship between Ψ and S in unforced simulations of the two-layer model introduced in Sect. 2. We perform a multifactorial experiment in which 1000-member ensembles of simulations are integrated for both 150- and 1000-year durations, over a range of S from 0 to 10 ∘C, and with γ either set to the default value of 0.7 or, alternatively, set to 0, in which case we recover the single-layer version of the model. All other model parameters are held fixed at standard values in these experiments. Since there is no forced trend in these experiments, we do not include any explicit detrending step in these analyses. However, the results are insensitive to detrending.

Figure 1 shows the results obtained when Ψ is calculated from the time series of annual mean surface temperatures produced by these simulations. For 150-year simulations using the single-layer model, there is a strong linear relationship between the mean value of Ψ obtained and the sensitivity of the model, just as Cox et al. (2018a) argued. However, Cox et al. (2018a) did not consider sampling variability, that is to say, the precision with which this expected value of Ψ might be estimated from a finite time series. As our results show, there is substantial uncertainty in the value of Ψ obtained by individual runs, and there is also strong heteroscedasticity; that is to say, the variance in each ensemble of Ψ values increases markedly with sensitivity. This variation arises from the sequence of noise terms which generate the internal variability in each simulation of the model and is therefore an intrinsic aspect of the theoretical framework relating Ψ to S. For these unforced simulations, it seems quite possible for a model with its sensitivity set to a value of 5 ∘C or even higher to generate a time series which has a modest value for Ψ of say 0.1, even though the expected value of Ψ from such model simulations would be much higher. Similarly to the results shown by Kirk-Davidoff (2009), an accurate diagnosis of Ψ could in principle be made with a sufficiently long time series of internal variability, but the sampling uncertainty only decreases with the square root of the duration of the time series (as expected from the central limit theorem), so this is unlikely to be of use in practical applications with real data.

When we consider the two-layer model using the standard parameter value of γ=0.7, the situation is a little different. In this case the relationship between sensitivity and Ψ is flatter and more curved, with the expected value of Ψ changing slowly for S>4 ∘C. The underlying explanation for this is quite simple. Any small perturbation to the surface temperature is damped on the annual timescale by a relaxation factor which varies in proportion to ϵγ−λ, and ϵγ is equal to 0.91 for standard parameter values. Therefore, when S is large, changes in have relatively little impact on the total damping, and thus both the magnitude and autocorrelation of variability are relatively insensitive to further increases in S. Williamson et al. (2019) also presented a theoretical analysis of this two-layer model in which it was argued that the response of Ψ was close to linear across the GCM parameter range, and our result confirms this for sensitivity values from around 2 to 4 or even 5 ∘C. However, the gradual curvature for larger values results in a saturation of the response of Ψ to increases in S, and this, together with the increasing sampling uncertainty, has consequences that will be shown in subsequent experiments. In fact the relationship between Ψ and the transient climate response (i.e. the warming observed at the time of CO2 doubling under a 1 % per annum increase) is more close to linear than the relationship between Ψ and S. Thus our work does not challenge the underlying analysis that they presented but augments it with additional details.

Figure 1Ψ estimated from 150-year time series for one- and two-layer models. Grey results are for single-layer model, and black results are for two-layer model. Large dots show means of 1000 simulations, with error bars indicating ±2 SD ranges for each ensemble. Results are calculated at each integer value of sensitivity and offset slightly for visibility.

We now directly consider the question of how useful an observed value Ψo can be as a constraint on the equilibrium climate sensitivity through Bayesian estimation. It is not trivial to directly calculate the exact value of the likelihood P(Ψo|Θ) for a given observed value Ψo, as Ψ is itself a random variable arising from the stochastic model and thus depends on the sequence of random perturbations that were generated during the numerical integration of the model. Therefore, we use here the technique known as approximate Bayesian computation (Diggle and Gratton, 1984; Beaumont et al., 2002). This is a rejection-based sampling technique in which samples are drawn from the prior distribution, used to generate a simulated temperature time series, and rejected if the value of Ψ calculated from this time series does not lie within a small tolerance of the observed value. The set of accepted samples then approximately samples the desired posterior. We have no observations of long periods of unforced climate variability with the real climate system, so we perform a number of synthetic tests in which different hypothetical values for Ψo are tested.

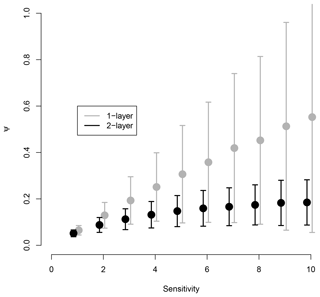

Figure 2Posterior estimates of sensitivity inferred by using observations of Ψ to constrain parameters in the two-layer model. Top panel: four solid-line pdf's in blue, cyan, magenta, and green represent estimates based on 150-year unforced simulations, assuming observations of Ψo = 0.05, 0.1, 0.15, and 0.2 respectively, where only S is uncertain with uniform prior. Dashed blue line represents posterior estimate for Ψo = 0.05 with additional parametric uncertainties as described in Sect. 3.2. Horizontal lines and dots in “Unforced” central panel indicate 5 %–95 % ranges and median respectively of these experiments. Horizontal lines and dots labelled as “Forced (20th century)” are similar results based on forced simulations of historical period as described in Sect. 4.1. Solid lines: only S is uncertain. Dashed lines: multiple uncertain parameters.

Our experiments take the form of a perfect model scenario, where the model is assumed to be a perfect representation of the system under consideration, with no structural imperfections. Our uncertainties here are due solely to unknown parameter values and internal variability noise. In these experiments, we assume that Ψ for the true system is calculated from a 150-year temperature time series of the unforced system, without any observational error whatsoever. The results of four experiments – using values of Ψo which range from 0.05 to 0.2 in regular increments – are shown in Fig. 2. There is not necessarily an immediate correspondence between these synthetic values and the observationally derived value that Cox et al. (2018a) calculated, as we are using unforced model simulations here. Nevertheless, the results are qualitatively interesting. With other model parameters set to the default values, the four values of Ψo used here correspond to the expected value generated by 150-year integrations with sensitivities of approximately 1, 2.5, 5, and 10 ∘C respectively. The figure shows that in this experimental scenario, Ψ can only provide a tight constraint in the first case where the sensitivity is very low. In this case, the 5 %–95 % range of the posterior is an impressively narrow 0.6–1.7 ∘C. For the case Ψo=0.1, the equivalent probability interval is 1.8–8.1 ∘C, and for higher values of Ψ the posterior is very flat indeed with just the very lowest values of S excluded. Similar results are obtained when equivalent values of τ are used as observational constraints.

Strictly, when considering the strength of the constraint obtained from the variability, we should focus on the likelihood P(Ψ|S) rather than the posterior pdf P(S|Ψ), since the latter depends also on the prior, which is in principle independent of the observations. The likelihood for different values of S, which tells us the relative probability of any particular sensitivity value generating the observation, can be directly read off from Fig. 2 as the height of the appropriate density curve at the specific sensitivity value. In the experiment performed with Ψo=0.1, the maximum likelihood value is achieved at a value of S=2.5 ∘C, and the likelihood drops by a factor of 10 at both S=1.3 and S=7.9 ∘C. Kass and Raftery (1995) suggest that a likelihood ratio of 10 or more between two competing hypotheses could be taken to represent “strong” evidence in favour of one over the other, so if we adopt this linguistic calibration we could say that the observation of Ψo=0.1 represents strong evidence in favour of S=2.5 ∘C versus all values outside of the range 1.3–7.9 ∘C (but conversely, does not represent strong evidence to discriminate between any pair of values inside that range). It is somewhat coincidental that this range seems quite similar to the 5 %–95 % range of the posterior pdf as the philosophical interpretation of the ranges is rather different. There is a strong skew in this range, which extends much further towards higher values of S than lower ones, compared to the maximum likelihood estimate. We stress that this skew is a fundamental property of the physical model and is not due to the Bayesian analysis paradigm.

3.2 Additional uncertainties

The pdf's plotted in the top panel of Fig. 2 assume that all model parameters other than S are known with certainty. In reality, we have significant uncertainty as to what values we should assign to several other parameters. We consider just three of these: the ocean heat uptake parameter γ, the efficacy or pattern effect parameter ϵ, and the internal noise parameter σ. Geoffroy et al. (2013a) fitted the two-layer model to various GCM outputs in order to estimate parameter values including γ and ϵ, and based on these results we use as priors for these parameters the distributions N(0.7, 0.2) and N(1.3,0.3) respectively, which generously encapsulate their results. Geoffroy et al. (2013a) did not consider internal variability, and thus we do not have such a solid basis for a prior in σ and assume a comparable relative uncertainty of 20 %, i.e. a prior of N(0.05, 0.01). When we repeat the previous experiments but include these additional parametric uncertainties, then for the experiment where we use Ψo=0.05 as a constraint, the posterior for S widens substantially from the previous spread of 0.6–1.7 ∘C, to 0.6–4.9 ∘C as also shown as the dashed blue line in Fig. 2. The largest factor generating this substantial increase in uncertainty is due to the uncertainty in σ. The equivalent posteriors using the larger observational values for Ψo also broaden somewhat, but this is less visible in the results as they are of course always constrained by the prior range.

3.3 Using the full time series

Although the results in Fig. 2 show that an observation of Ψ taken from a short unforced simulation cannot tightly constrain equilibrium sensitivity in this model (except perhaps in the most exceptional of circumstances), it could still be hoped that a more precise constraint could possibly be gleaned by a more advanced analysis that uses some different diagnostic of the time series. In this section, we show how the total information of the time series can be used. By doing this, we create the most optimistic possible scenario for using internal variability to constrain equilibrium sensitivity of this simple climate model.

This approach requires us to calculate the likelihood for the full set of observations, P(O|Θ), where here , i=1 … N, is the full time series of annual surface temperature anomalies. Once the model parameters and forcing are prescribed, the time series of surface temperature anomalies is uniquely determined by the series of random noise perturbations δi. Thus, in the absence of observational error, we can invert this calculation to calculate (up to machine precision) the sequence of annual random noise perturbations δi, i=1 … N that are required in order to replicate any given observed temperature time series. This is why we selected a model time step as long as 1 year, as it results in the number of observations being as large the number of noise terms making this exact inversion possible. The probability of the model (with a particular set of parameters) generating the observed sequence is exactly the probability of the required noise sequence being sampled. This value is readily calculated, since the joint density of these independent and identically distributed δi is simply the product of their individual densities. This unusual approach, which we do not believe has been previously implemented in this context, is possible here as we are assuming zero observational uncertainty. With this exact likelihood calculation, the Bayesian estimation process is straightforward. In the case where only sensitivity is considered uncertain, it can be performed by direct numerical integration, sampling the sensitivity on a fine regular grid and calculating the likelihood (and therefore posterior) directly at these values.

It is worth emphasising that this calculation represents an absolute best-case scenario for using the time series of temperature anomalies as a constraint. There can be no diagnostic or statistical summary of the observations that provides more information than the full set of observations themselves contain. Thus, we cannot hope to obtain a better constraint by some alternative analysis of the temperature time series.

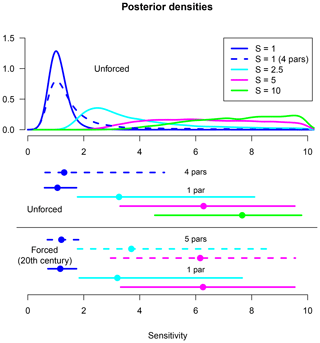

Figure 3Posterior estimates for the climate sensitivity from Bayesian estimation using the full time series of annual mean surface temperatures. Main plot: results from 150-year unforced simulations as discussed in Sect. 3.3. Twenty replicates are performed for each true sensitivity of 1, 2.5, and 5 ∘C as indicated by the colour blue, cyan, and magenta respectively. Horizontal lines and dots immediately below top panel show means of the 5 %–95 % range and median of each set of results. Horizontal lines labelled “C20th” show analogous results using simulations of historical period, with only S uncertain or with five uncertain parameters as discussed in Sect. 4.2.

Figure 3 shows results obtained from this approach, in the case where only S is considered uncertain. To aid visual comparability with Fig. 2, the y-axis scale is fixed at the same value despite cutting the peaks of some pdf's. It is not possible to define what a “typical” noise sequence might look like, and therefore we plot 20 replications with different randomly generated instances of internal variability for each sensitivity value tested. It seems that the results may be a little more precise than was obtained using Ψ alone (as shown by the pdf's generally having higher peak densities), though this depends on the specific sample of internal variability that was obtained. It is still only in the case of the lowest sensitivity value of 1 ∘C that we reliably obtain a tight constraint. With the true sensitivity of 2.5 ∘C, the posterior 5 %–95 % range, averaged over the samples, is 1.9–7.0 ∘C, marginally narrower than the 1.8–8.1 ∘C range obtained previously when an equivalent Ψ value of 0.1 was used. When additional parametric uncertainties are considered in this unforced scenario, the constraints again weaken, though not to quite such an extent as in Sect. 3.2 when only Ψ is used as a constraint. We do not show these results here.

Thus, there appears to be the potential for internal variability, as represented by the full temperature time series, to provide a slightly better constraint than that obtained by a summary statistic alone, but the improvement is marginal, and even our optimal calculation, which uses the exact likelihood of the full time series, cannot accurately diagnose equilibrium sensitivity except when the true value is very low. These results again show a skew similar to that obtained when Ψ was used as the constraint in Sect. 3.1 and 3.2. Thus this non-Gaussian likelihood is again an inherent property of the physical model and not an artefact of the analysis. We mention again that these calculations are made under the three optimistic assumptions that (a) the model is perfect and we have exact knowledge of all other model parameters, (b) we know the forcing to be zero over this time period, and (c) there is no observational error.

While the theoretical underpinning of Cox et al. (2018a) was originally based on the properties of unforced internal variability, Cox et al. (2018b) acknowledged that their approach may have benefited from some signature of forced variability entering into their calculations. In order to calculate their Ψ statistic, they applied a windowed detrending method in order to focus on variability of both model simulations and observations of the historical period. However, the window length of 55 years that they used was justified primarily in empirical terms and cannot remove shorter-term variations in forced response.

In this section, we perform a series of analyses based on historical forced simulations, in order to investigate more fully the potential for such forced effects to improve the constraint. We force the climate model with annual time series for the major forcing factors based on IPCC (Annex II: Climate System Scenario Tables, 2013). Our two-layer model with a 1-year time step (and Euler method of numerical integration) reacts rather too strongly to short-term spikes in forcing, and thus we scale the volcanic forcing to 70 % of the nominal value in order to give more realistic simulations. In some of the following experiments, we consider aerosol forcing as a source of uncertainty in addition to that arising from the internal parameters of the model. This uncertainty is implemented via a scaling factor denoted by α, which is uncertain but constant in time, applied to the original aerosol forcing time series. In these cases, our prior distribution for α is N(1,0.52).

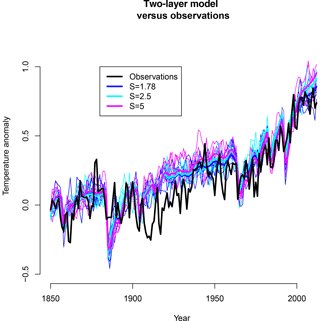

Figure 4Simulations of instrumental period with two-layer model. Thick lines are forced response excluding internal variability; thin lines are five replicates of each parameter set including internal variability. Blue lines: S=1.78 ∘C, γ=0.7 W m−2 ∘C−1, ϵ=1.3. Cyan lines: S=2.5 ∘C, γ=1.0 W m−2 ∘C−1, ϵ=1.7. Magenta lines: S=5 ∘C, γ=1.0 W m−2 ∘C−1, ϵ=1.7, α=1.7. Black line is HadCRUT data.

Figure 4 presents 18 simulations from the model, consisting of five instances of internal variability for each of three different parameter sets, which were chosen to give reasonable agreement with observational data, and additionally one simulation for each of these parameter sets in which internal variability was not included in order to show the pure forced response. These simulations are shown merely to indicate the typical behaviour of the model under historical forcing estimates and are not directly relevant to our analyses. Note that the observations of the real climate system which are also plotted here include observational error (estimated to be roughly ±0.05 ∘C at the 1 standard deviation level), whereas the model output is presented as an exact global temperature. Thus it is to be expected that the model results are somewhat smoother and less variable than the observations, although it may also be the case that the two-layer model has insufficient variability. For each of these three simulations without internal variability, the RMS differences between model output and observations is 0.13 ∘C.

When we hold other parameters at default levels, best agreement between model and data (defined here simply by RMS difference between the two time series) is achieved for a rather low sensitivity of 1.78 ∘C. If the γ and ϵ parameters are increased slightly above their defaults, then we can achieve an equally good simulation (again as measured by RMS difference) with a higher value for sensitivity of 2.5 ∘C. If, additionally, aerosol forcing is also increased above the default value, then a higher sensitivity still of 5 ∘C achieves an equally good match to the observed temperature time series at the global scale. While there are hemispheric differences in these forcings that may provide some additional information (Aldrin et al., 2012), these simulations help to illustrate why it has been so challenging to effectively constrain equilibrium sensitivity from the long-term observed warming.

4.1 Using Ψ

In order to assess what we can learn about sensitivity from the variability in historical temperature observations, we first consider the utility of Ψ calculated from simulations over this period. Cox et al. (2018a) proposed that the effect of forcing over this interval could be effectively removed by a process of windowed detrending. Figure 5 shows results analogous to the two-layer simulations in Fig. 1 but using forced simulations of the historical period rather than unforced control simulations and, therefore, with Ψ calculated via the windowed detrending method of Cox et al. (2018a). One very minor discrepancy with the calculations presented in that paper is that our simulations only extend to 2012 (this being the limit of the forcing time series that we are using), and therefore we omit the last 4 years of the time period that they used. This does not significantly affect any of our results. We do not consider one-layer simulations here as this version of the model is known to provide poor simulations of historical changes (Rypdal and Rypdal, 2014).

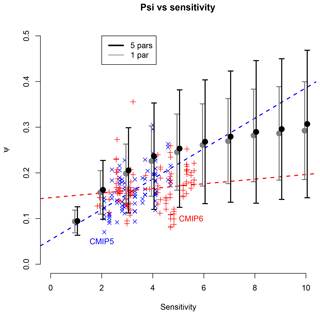

Figure 5Ψ estimated from historical simulations from two-layer model. Grey results are based on simulations where only S is considered uncertain. Black results additionally account for uncertainty in γ, ϵ, σ, and α. Large dots show means of 1000 simulations, with error bars indicating ±2 SD ranges for each ensemble. Blue and red crosses indicate results generated by CMIP5 and CMIP6 models respectively, together with the best-fit regression lines as dashed lines, in matching colours.

Grey dots and error bars indicate results obtained when only S is considered uncertain. These results are qualitatively similar to those obtained by the unforced two-layer simulations in Fig. 1, in that they exhibit a nonlinear and heteroscedastic relationship that levels off for large values of S. The values of Ψ obtained at each value of S are however somewhat larger in the forced experiments, which supports the claims of Brown et al. (2018) and Po-Chedley et al. (2018) that the windowed detrending has not been wholly effective in eliminating all influence of forcing. As mentioned previously, analysis of GCM outputs indicate significant uncertainty in other model parameters, and therefore we have performed additional ensembles of simulations which account for uncertainty both in model parameters and aerosol forcing. These uncertain parameters – and their priors – are the same as in Sect. 3.2, with the addition here that we also consider uncertainty in the aerosol forcing through the scaling parameter α. The combined effect of these uncertainties has little systematic effect on the mean estimate of Ψ but slightly increases the ensemble spread. Interestingly, in contrast to our earlier experiments, no single factor appears to have a dominant effect here.

Blue and red crosses also shown in Fig. 5 show results obtained from the CMIP5 and CMIP6 ensembles. The CMIP models appear to generate slightly lower values of Ψ than the two-layer model does with the same sensitivity, although the results seem broadly compatible. Reasons for the difference may include biases in parameters of the two-layer model, structural limitations, or differences in the forcings used. Although we are not replicating the emergent constraint approach here, we do note that there is a significant correlation in the CMIP5 ensemble results shown here between their sensitivities and Ψ values. However, the relationship seen here is weaker than that obtained by Cox et al. (2018a) for a different (though overlapping) set of models and explains a lower proportion of the variance. This remains true whether we perform the regression with S as predictor, as suggested by our figure, or when using Ψ as predictor as in Cox et al. (2018a). For CMIP6, the correlation is insignificant.

Figure 2 shows results generated when various hypothetical values for Ψo are used to constrain model parameters for historical simulations. As before, we test sensitivity values of 1, 2.5, and 5 ∘, which here correspond to values for Ψo of 0.1, 0.18, and 0.25 respectively. As in the previous experiments, the smallest value of Ψo generates a tight constraint with a 5 %–95 % range of 0.7–1.7 ∘C when only S is considered uncertain. This grows to 1.8–7.2 ∘C for Ψo=0.18, and the larger value of Ψo=0.25 provides very little constraint. When the additional parametric and forcing uncertainties are considered, the tightest range corresponding to Ψo=0.1 grows a little to 0.7–2.0 ∘C, and the Ψo=0.18 case spreads to 1.8–8.8 ∘C.

When we use the observational value of Ψo=0.13 (calculated from HadCRU data) and include multiple parametric uncertainties, the 17 %–83 % posterior range is 1.3–2.7 ∘C, and the 2.5 %–97.5 % range is 0.9–5.1 ∘C. These ranges are somewhat larger than the equivalent results presented by Cox et al. (2018a), which were 2.2–3.4 ∘C and 1.6–4.0 ∘C respectively, despite the highly optimistic perfect model scenario considered here.

4.2 Using the full time series

Finally, we repeat the approach of Sect. 3.3 and use the full information of the time series, by the same method of inverting the model to diagnose the internal variability noise that is required to generate the observed temperature time series. Since we are interested solely in variability, we only consider the temperature residuals after detrending. We use a simple linear detrending over the period 1880–2012, which will leave a signature primarily due both to volcanic events and also the contrasting temporal evolution of negative aerosol forcing and positive greenhouse gas forcing, which both generally increase throughout this period but which exhibit different multidecadal patterns.

The calculation is similar to that of Sect. 3.3, but there are some minor details which are worth mentioning. Although the detrending is performed over the interval 1880–2012, we initialise the simulations in 1850 to allow for a spin-up. In contrast to Sect. 3.3 where detrending was not performed, knowledge of the residuals after detrending does not actually enable an exact reconstruction of the internal variability in the model simulation, as any random trend in this internal variability will have been removed by detrending. In fact a whole family of different model simulations will be aliased onto the same residuals. Therefore, our inversion only truly calculates the noise perturbations after the removal of the component that generates any linear trend. This is not a significant problem for the likelihood calculation as the effect of this aliasing is minor, and its dependence on model parameters is negligible.

The results of multiple replicates are shown in Fig. 3, in which we consider first the case where only S is uncertain and then our larger set of parametric uncertainties. In the case where only S is unknown, the full time series of detrended residuals provides strong evidence on S, which can as a result be tightly constrained except perhaps when it takes a high value such as 5 ∘C. For S=2.5 ∘C, the posterior 5 %–95 % range is typically under 2 ∘C in width, with the average of our samples being 1.9–3.5 ∘C. When multiple uncertainties are considered, the constraint is however markedly weakened. For a true sensitivity of S=2.5 ∘C, the posterior 5 %–95 % range is typically over 4∘, at 1.6–6.0 ∘C, with a “likely” 17 %–83 % range of 2.2–4.1 ∘C. As in the unforced experiments, these optimal constraints appear somewhat narrower than can be obtained by using the Ψ statistic but are not necessarily tight enough to be compelling in themselves.

We have explored the potential for using interannual temperature variability in estimating equilibrium sensitivity. While – as Williamson et al. (2019) argued – there is generally a quasi-linear relationship between S and the expected value of over a reasonable range of S in the simple energy balance model, this relationship saturates for higher S, and furthermore, sampling variability is significant and highly heteroscedastic. These properties undermine the theoretical basis for the linear regression emergent constraint approach which was presented by Cox et al. (2018a), as the ordinary least squares regression method relies on a linear relationship with homoscedastic errors. The behaviour of the model instead results in an inherently skewed likelihood P(Ψ|S) with a long tail to high values for S. Thus, while the Ψ statistic can indeed be informative on S, the constraint it provides based on internal variability in the case of unforced simulations is rather limited. Furthermore, the CMIP5 and CMIP6 ensembles exhibit quite different relationships in the regression framework, suggesting a lack of robustness of the original analysis. We have shown how it is possible in principle to extract the full information from time series of annual temperatures, by calculating the exact likelihood for the complete set of these observations. However, even in this scenario of a perfect model with a few well-characterised parametric uncertainties and no observational uncertainty on the temperature time series, the constraint on sensitivity is seriously limited by the variability inherent to the model. It is only in the case where the true value of the sensitivity is very low that such an approach can generate a tight constraint. For example, if the true sensitivity takes a moderate value of 2.5 ∘C, then we could only expect to generate a constraint with a typical 5 %–95 % range of around 1.9–6.8 ∘C. As was the case when using Ψ, estimates generated from the full time series are rarely close to symmetric and instead are typically skewed with a long tail to high values. This skew is an inherent property of the physical model that defines the likelihood and not an artefact of our analysis methods.

Forced variability, such as that occurring during the instrumental period, does provide additional information in our experiments, and therefore we could in theory hope to calculate a narrower posterior range, with a typical width of around 4 ∘C (e.g. 1.8–6.0 ∘C) when the true sensitivity is 2.5 ∘C. It must however be emphasised that these calculations rely on very optimistic assumptions and therefore represent a best case that is unlikely to be realised in reality. Nevertheless, our results do suggest that variability can inform on the sensitivity and may generate a useful constraint in addition to that arising from the longer-term observed trend.

Data and code to reproduce all figures and analysis are included in the Supplement.

The supplement related to this article is available online at: https://doi.org/10.5194/esd-11-709-2020-supplement.

JDA and JCH undertook the research and wrote the article. TS and BS contributed to the conception of the study and provided critical feedback to the research and article.

The authors declare that they have no conflict of interest.

We would like to thank the editor and two reviewers for their helpful comments.

This research was supported by the MPI-Hamburg. Thorsten Mauritsen was also supported by the European Research Council (ERC) (grant no. 770765) under the European Commission Horizon 2020 research and innovation programme (grant no. 820829).

This paper was edited by Steven Smith and reviewed by two anonymous referees.

Aldrin, M., Holden, M., Guttorp, P., Skeie, R. B., Myhre, G., and Berntsen, T. K.: Bayesian estimation of climate sensitivity based on a simple climate model fitted to observations of hemispheric temperatures and global ocean heat content, Environmetrics, 23, 253–271, https://doi.org/10.1002/env.2140, 2012. a

Beaumont, M. A., Zhang, W., and Balding, D. J.: Approximate Bayesian computation in population genetics, Genetics, 162, 2025–2035, 2002. a

Brown, P. T., Stolpe, M. B., and Caldeira, K.: Assumptions for emergent constraints, Nature, 563, E1–E3, https://doi.org/10.1038/s41586-018-0638-5, 2018. a, b

Caldwell, P. M., Bretherton, C. S., Zelinka, M. D., Klein, S. A., Santer, B. D., and Sanderson, B. M.: Statistical significance of climate sensitivity predictors obtained by data mining, Geophys. Res. Lett., 41, 1803–1808, 2014. a

Cox, P. M., Huntingford, C., and Williamson, M. S.: Emergent constraint on equilibrium climate sensitivity from global temperature variability, Nature, 553, 319–322, 2018a. a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p

Cox, P. M., Williamson, M. S., Nijsse, F. J., and Huntingford, C.: Cox et al. reply, Nature, 563, E10–E15, https://doi.org/10.1038/s41586-018-0641-x, 2018b. a, b

Diggle, P. J. and Gratton, R. J.: Monte Carlo methods of inference for implicit statistical models, J. Roy. Stat. Soc. B Met., 46, 193–212, 1984. a

Einstein, A.: Über die von der molekularkinetischen Theorie der Wärme geforderte Bewegung von in ruhenden Flüssigkeiten suspendierten Teilchen, Ann. Phys-Berlin, 322, 549–560, 1905. a

Eyring, V., Bony, S., Meehl, G. A., Senior, C. A., Stevens, B., Stouffer, R. J., and Taylor, K. E.: Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization, Geosci. Model Dev., 9, 1937–1958, https://doi.org/10.5194/gmd-9-1937-2016, 2016. a

Foster, G., Annan, J. D., Schmidt, G. A., and Mann, M. E.: Comment on “Heat capacity, time constant, and sensitivity of Earth's climate system” by S. E. Schwartz, J. Geophys. Res., 113, D15102, https://doi.org/10.1029/2007JD009373, 2008. a

Geoffroy, O., Saint-Martin, D., Bellon, G., Voldoire, A., Olivié, D., and Tytéca, S.: Transient climate response in a two-layer energy-balance model. Part II: Representation of the efficacy of deep-ocean heat uptake and validation for CMIP5 AOGCMs, J. Climate, 26, 1859–1876, 2013a. a, b, c, d

Geoffroy, O., Saint-Martin, D., Olivié, D. J., Voldoire, A., Bellon, G., and Tytéca, S.: Transient climate response in a two-layer energy-balance model. Part I: Analytical solution and parameter calibration using CMIP5 AOGCM experiments, J. Climate, 26, 1841–1857, 2013b. a

Gregory, J. M., Stouffer, R. J., Raper, S. C. B., Stott, P. A., and Rayner, N. A.: An observationally based estimate of the climate sensitivity, J. Climate, 15, 3117–3121, 2002. a

Hasselmann, K.: Stochastic climate models, Tellus, 28, 473–485, 1976. a, b

Held, I. M., Winton, M., Takahashi, K., Delworth, T., Zeng, F., and Vallis, G. K.: Probing the fast and slow components of global warming by returning abruptly to preindustrial forcing, J. Climate, 23, 2418–2427, 2010. a

IPCC: Annex II: Climate System Scenario Tables, in: Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change, edited by: Stocker, T., Qin, D., Plattner, G.-K., Tignor, M., Allen, S., Boschung, J., Nauels, A., Xia, Y., Bex, V., and Midgley, P., Cambridge University Press, Cambridge, United Kingdom, and New York, NY, USA, book section AII, 1395–1446, https://doi.org/10.1017/CBO9781107415324.030, 2013. a, b

Kass, R. and Raftery, A.: Bayes factors, J. Am. Stat. Assoc., 90, 773–795, https://doi.org/10.1080/01621459.1995.10476572, 1995. a

Kirk-Davidoff, D. B.: On the diagnosis of climate sensitivity using observations of fluctuations, Atmos. Chem. Phys., 9, 813–822, https://doi.org/10.5194/acp-9-813-2009, 2009. a, b

Knutti, R., Krähenmann, S., Frame, D. J., and Allen, M. R.: Comment on “Heat capacity, time constant, and sensitivity of Earth's climate system” by S. E. Schwartz, J. Geophys. Res., 113, D15103, https://doi.org/10.1029/2007JD009473, 2008. a

Lewis, N. and Curry, J. A.: The implications for climate sensitivity of AR5 forcing and heat uptake estimates, Clim. Dynam., 45, 1009–1023, 2015. a

Morice, C. P., Kennedy, J. J., Rayner, N. A., and Jones, P. D.: Quantifying uncertainties in global and regional temperature change using an ensemble of observational estimates: The HadCRUT4 data set, J. Geophys. Res.-Atmos., 117, D08101, https://doi.org/10.1029/2011jd017187, 2012. a

Otto, A., Otto, F. E. L., Boucher, O., Church, J., Hegerl, G., Forster, P. M., Gillett, N. P., Gregory, J., Johnson, G. C., Knutti, R., Lewis, N., Lohmann, U., Marotzke, J., Myhre, G., Shindell, D., Stevens, B., and Allen, M. R.: Energy budget constraints on climate response, Nat. Geosci., 6, 415–416, https://doi.org/10.1038/ngeo1836, 2013. a

Po-Chedley, S., Proistosescu, C., Armour, K. C., and Santer, B. D.: Climate constraint reflects forced signal, Nature, 563, E6–E9, https://doi.org/10.1038/s41586-018-0640-y, 2018. a, b

Rypdal, M. and Rypdal, K.: Long-memory effects in linear response models of Earth’s temperature and implications for future global warming, J. Climate, 27, 5240–5258, 2014. a, b

Rypdal, M., Fredriksen, H.-B., Rypdal, K., and Steene, R. J.: Emergent constraints on climate sensitivity, Nature, 563, E4–E5, https://doi.org/10.1038/s41586-018-0639-4, 2018. a

Schwartz, S. E.: Heat capacity, time constant, and sensitivity of Earth's climate system, J. Geophys. Res., 112, D24S05, https://doi.org/10.1029/2007JD008746, 2007. a, b, c, d, e, f

Taylor, K. E., Stouffer, R. J., and Meehl, G. A.: An overview of CMIP5 and the experiment design, B. Am. Meteorol. Soc., 93, 485–498, 2012. a, b

Williamson, M. S., Cox, P. M., and Nijsse, F. J.: Theoretical foundations of emergent constraints: relationships between climate sensitivity and global temperature variability in conceptual models, Dynamics and Statistics of the Climate System, 3, 1–14, https://doi.org/10.1093/climsys/dzy006, 2019. a, b, c, d

Winton, M., Takahashi, K., and Held, I. M.: Importance of ocean heat uptake efficacy to transient climate change, J. Climate, 23, 2333–2344, 2010. a, b, c